Toy makers showcasing products at the Consumer Electronics Show (CES) insisted they are taking precautions to ensure that toys powered by artificial intelligence (AI) remain safe and appropriate for children.

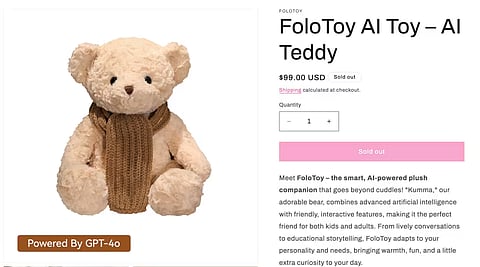

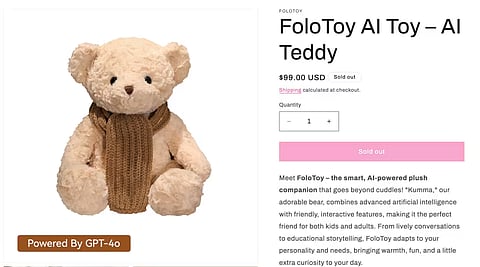

The assurances come after a report by the Public Interest Research Groups (PIRG) found troubling behavior in an AI-powered teddy bear. According to the report, the Kumma teddy bear gave advice related to sex and suggested ways to find a knife when prompted. In one instance, the toy proposed adding a “fun twist” to a relationship and suggested role-playing as an animal. The findings were published in PIRG’s annual report Trouble in Toyland in November 2025.

Following the report and the resulting backlash, Singapore-based startup FoloToy temporarily suspended sales of the Kumma bear. FoloToy chief executive Wang Le told Agence France-Presse (AFP) that the company has since upgraded the AI model originally used in the toy. He said the earlier version generated language that children would not normally use, and expressed confidence that the updated system would now avoid or refuse to answer inappropriate questions.

Toy giant Mattel, meanwhile, announced in mid-December that it was postponing its first AI-powered toy, developed in partnership with ChatGPT-maker OpenAI. The announcement did not reference the PIRG report.

The rapid growth of generative AI since the rise of ChatGPT has ushered in a new category of “smart toys” for children. One of the toys evaluated by PIRG was Curio’s Grok (not to be confused with xAI’s chatbot of the same name), a four-legged plush toy inspired by a rocket and sold since 2024. Grok was cited as a strong performer among AI toys, as it consistently refused to answer questions deemed inappropriate for a five-year-old.

The toy also allows parents to override its recommendation algorithm, install their own settings, and review records of the toy’s interactions with children. Curio has received the independent KidSAFE certification, which verifies that a product meets child protection standards.

Despite this, concerns remain. Grok is programmed to constantly listen for questions, raising issues related to privacy and data security. Curio said it is addressing concerns raised in the PIRG report about user data being shared with third-party partners such as OpenAI and Perplexity.

Some companies argue that AI-powered toys can also provide educational benefits. Turkish firm Elaves said its yellow, round toy Sunny will use a chatbot to help children learn languages. Conversations with the toy are designed to be time-limited, guided toward natural endings, and reset regularly to prevent confusion, errors, or overuse, problems commonly associated with extended chatbot interactions.

Olli, a company specializing in AI integration for toys, said its software alerts parents when inappropriate words or phrases are detected during conversations with its AI-powered toys.

For critics and concerned parents, however, these safeguards are not enough. They argue that AI-powered toys should be subject to regulation rather than left to voluntary measures by manufacturers, citing the need to protect both children and families.

The emergence of AI toys signals a shift in childhood experiences, particularly for children born in 2025 and beyond, often referred to as Generation Beta. Unlike Generation Alpha, which grew up with digital devices and was introduced to AI during its early years, Generation Beta is expected to grow up surrounded by AI-driven toys from infancy. As these products become more common, questions continue to be raised about their long-term effects on child development.